Bayesian Deep Learning & Computer Vision

Why Should I Care About Uncertainty?

If you give me several pictures of cats and dogs – and then you ask me to classify a new cat photo – I should return a prediction with rather high confidence. But if you give me a photo of an ostrich and force me to decide whether it's a cat or a dog – I had better return a prediction with very low confidence.

Understanding what a model does not know is a critical part of many machine learning systems. Today, deep learning algorithms are able to learn powerful representations which can map high-dimensional data to an array of outputs. However, these mappings are often taken blindly and assumed to be accurate, which is not always the case, and this has already had disastrous consequences. In May 2016 there was the first fatality from an assisted driving system, caused by the perception system mistaking the white side of a trailer for bright sky [1].

Types of Uncertainty

The first question I'd like to address is: what is uncertainty? There are actually different types of uncertainty, and we need to understand which types matter for which applications. I'm going to discuss the two most important types – epistemic and aleatoric uncertainty.

Epistemic uncertainty

Epistemic uncertainty captures our ignorance about which model generated our collected data. This uncertainty can be explained away given enough data, and is often referred to as model uncertainty. Epistemic uncertainty is really important to model for:

- Safety-critical applications, because epistemic uncertainty is required to understand examples which are different from training data,

- Small datasets where the training data is sparse.
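
To make this concrete, here is a minimal sketch of how epistemic uncertainty shows up in practice. It is a toy of my own, not anyone's reference implementation: the one-weight model y = w * x, the Gaussian distribution over w, and the name `mc_predict` are all assumptions for illustration. We sample many plausible weights, predict with each, and read the spread of the predictions as model uncertainty.

```python
import random
import statistics


def mc_predict(x, w_mean, w_std, n_samples=1000, seed=0):
    """Monte Carlo predictive mean and spread for the toy model y = w * x.

    The weight w is drawn from an assumed Gaussian N(w_mean, w_std^2);
    the spread of the resulting predictions is the epistemic uncertainty.
    """
    rng = random.Random(seed)
    preds = [rng.gauss(w_mean, w_std) * x for _ in range(n_samples)]
    return statistics.fmean(preds), statistics.stdev(preds)


# A wide weight distribution stands in for sparse training data,
# a narrow one for plentiful data (hypothetical numbers).
mean_sparse, std_sparse = mc_predict(x=2.0, w_mean=1.5, w_std=0.5)
mean_dense, std_dense = mc_predict(x=2.0, w_mean=1.5, w_std=0.05)

print(f"sparse data:    {mean_sparse:.2f} +/- {std_sparse:.2f}")
print(f"plenty of data: {mean_dense:.2f} +/- {std_dense:.2f}")
```

As the distribution over the weight narrows, the spread of the predictions shrinks with it, which is what "explained away given enough data" looks like in this toy setting.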

Aleatoric uncertainty

Aleatoric uncertainty captures our uncertainty with respect to information which our data cannot explain. For example, aleatoric uncertainty in images can be attributed to occlusions (because cameras can't see through objects), a lack of visual features, or over-exposed regions of an image. Unlike epistemic uncertainty, it is not reduced by collecting more data; it can only be explained away by observing all explanatory variables with increasing precision. Aleatoric uncertainty is very important to model for:

- Large data situations, where epistemic uncertainty is mostly explained away,

- Real-time applications, because we can form aleatoric models as a deterministic function of the input data, without expensive Monte Carlo sampling.
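
One common way to get aleatoric uncertainty as a deterministic function of the input is to have the model output a variance next to its prediction and train both with a Gaussian negative log-likelihood. The sketch below assumes that setup; the function name `gaussian_nll` and the choice to predict a log-variance are mine, and the numbers are made up.

```python
import math


def gaussian_nll(y, mean, log_var):
    """Negative log-likelihood of y under N(mean, exp(log_var)).

    The constant 0.5 * log(2 * pi) is dropped because it does not affect training.
    Predicting the log-variance keeps the variance positive without constraints.
    """
    return 0.5 * math.exp(-log_var) * (y - mean) ** 2 + 0.5 * log_var


# Large residual: admitting high variance is cheaper than claiming confidence.
confident_and_wrong = gaussian_nll(y=2.0, mean=0.0, log_var=0.0)
uncertain_and_wrong = gaussian_nll(y=2.0, mean=0.0, log_var=math.log(4.0))

# Small residual: claiming high variance is penalised by the log-variance term.
confident_and_right = gaussian_nll(y=0.1, mean=0.0, log_var=0.0)
uncertain_and_right = gaussian_nll(y=0.1, mean=0.0, log_var=math.log(4.0))
```

The first term discounts the squared error where the model says the data is noisy, and the second term stops it from calling everything noisy. A single forward pass gives both the prediction and its uncertainty, so no sampling is needed at test time.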

How do we do it then?

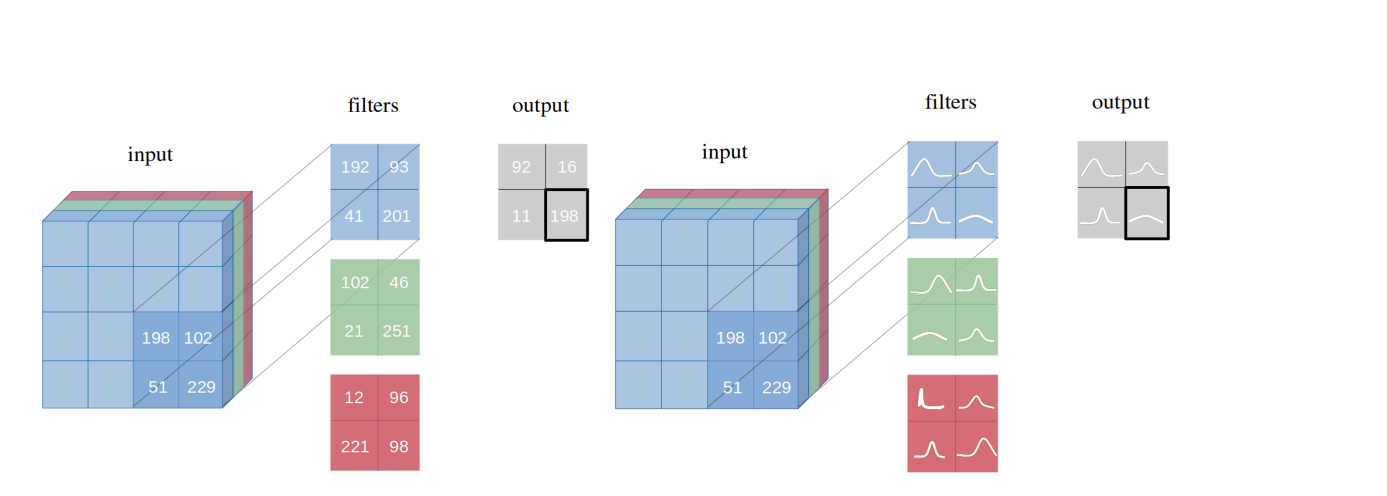

There are many ways to build Bayesian neural networks (we will ponder over a lot of them in Blog 3). In this series, however, we will focus on building a Bayesian CNN using Bayes by Backprop. Exact Bayesian inference on the weights of a neural network is intractable: the number of parameters is very large, and the functional form of a neural network does not lend itself to exact integration. So we approximate the intractable true posterior p(w|D) with a variational distribution q_θ(w|D). Here q_θ is Gaussian, N(w|μ, σ²), with variational parameters θ = (μ, σ), where μ ∈ ℝ^d, σ ∈ ℝ^d, and d is the number of weights, so every weight gets its own mean and standard deviation. The shape of each Gaussian, determined by its variance σ², expresses an uncertainty estimate for the corresponding model parameter.
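
The two ingredients of this approximation can be sketched in a few lines. Treat this as a simplified illustration under stated assumptions, not as the full Bayes by Backprop algorithm: I assume a zero-mean Gaussian prior with standard deviation `prior_std` so that the KL term has a closed form, I parameterise σ through a softplus of an unconstrained value `rho`, and all function names are my own.

```python
import math
import random


def softplus(rho):
    """Map an unconstrained parameter rho to a positive standard deviation."""
    return math.log1p(math.exp(rho))


def sample_weights(mu, rho, rng):
    """Reparameterised sample w = mu + sigma * eps, with eps ~ N(0, 1).

    Writing the sample this way keeps w a deterministic function of
    (mu, rho), which is what lets gradients flow back to them.
    """
    return [m + softplus(r) * rng.gauss(0.0, 1.0) for m, r in zip(mu, rho)]


def kl_to_prior(mu, rho, prior_std=1.0):
    """KL(q || p) for q = N(mu, sigma^2) per weight and p = N(0, prior_std^2)."""
    total = 0.0
    for m, r in zip(mu, rho):
        sigma = softplus(r)
        total += (math.log(prior_std / sigma)
                  + (sigma ** 2 + m ** 2) / (2.0 * prior_std ** 2)
                  - 0.5)
    return total


rng = random.Random(0)
mu = [0.2, -0.1, 0.0]      # variational means, one per weight
rho = [-3.0, -3.0, -3.0]   # softplus(-3) is roughly 0.05, a narrow posterior
weights = sample_weights(mu, rho, rng)
complexity_cost = kl_to_prior(mu, rho)
```

In training, the loss for a mini-batch would be this KL term (suitably scaled) plus the negative log-likelihood of the data under the sampled weights, with gradients taken with respect to `mu` and `rho` by an autodiff framework.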

Uncertainty for multi-task learning

Next I'm going to discuss an interesting application of these ideas for multi-task learning. Multi-task learning aims to improve learning efficiency and prediction accuracy by learning multiple objectives from a shared representation. It is prevalent in many areas of machine learning, from NLP to speech recognition to computer vision. Multi-task learning is of crucial importance in systems where long computation run-time is prohibitive, such as the ones used in robotics. Combining all tasks into a single model reduces computation and allows these systems to run in real-time. Most multi-task models train on different tasks using a weighted sum of the losses. However, the performance of these models depends strongly on the relative weighting of the task losses. Tuning these weights by hand is a difficult and expensive process, making multi-task learning prohibitive in practice.
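
To see why the weighting matters so much, here is a small sketch of the weighted-sum loss. The task names, loss values, and weights are hypothetical, picked only to show how losses on different scales interact.

```python
def weighted_multitask_loss(losses, weights):
    """Combine per-task losses into one scalar using hand-picked weights."""
    return sum(weights[task] * loss for task, loss in losses.items())


# Hypothetical per-task losses on very different scales.
losses = {"segmentation": 0.8, "depth": 12.0}

equal_weights = {"segmentation": 1.0, "depth": 1.0}
tuned_weights = {"segmentation": 1.0, "depth": 0.05}

total_equal = weighted_multitask_loss(losses, equal_weights)
total_tuned = weighted_multitask_loss(losses, tuned_weights)

# Share of the total contributed by the depth task under each weighting.
depth_share_equal = equal_weights["depth"] * losses["depth"] / total_equal
depth_share_tuned = tuned_weights["depth"] * losses["depth"] / total_tuned
```

With equal weights the depth loss makes up almost all of the total, so the shared representation is trained mostly for depth. Rebalancing fixes that, but the right values depend on the loss scales, and every extra task adds another knob to tune.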